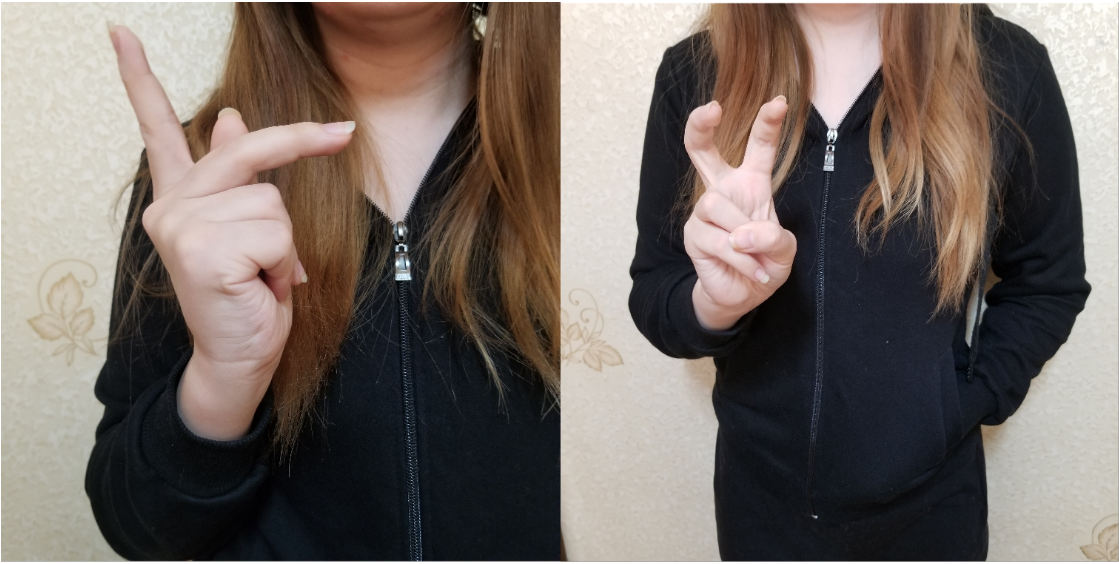

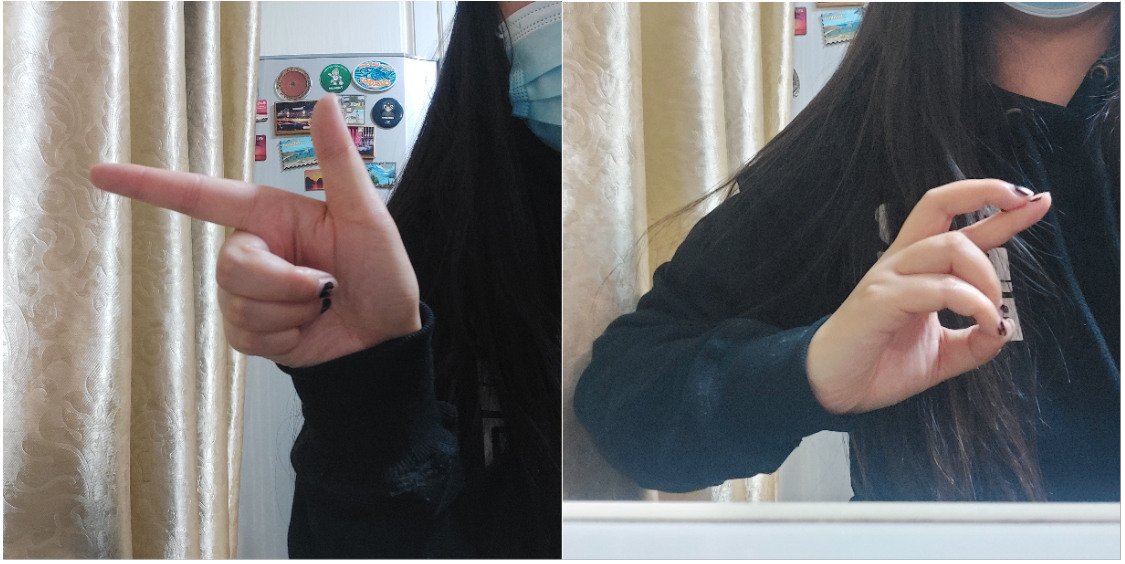

People with speech impairments often find it difficult to communicate with the wider society, which creates a clear need for automatic sign language translation systems. Many methods and datasets have been proposed for English sign language, but far fewer exist for Arabic Sign Language. We therefore present the Arabic Sign Language Alphabet Dataset (ASLAD), which we collected and labeled ourselves: it consists of 14,400 images of 32 signs (available here) collected from 50 people. We analyze the performance of state-of-the-art object detection models on ASLAD. We also demonstrate successful real-time detection of Arabic signs on sample videos from YouTube. Finally, we believe ASLAD offers an opportunity to experiment with and develop automated systems, based on computer vision and deep learning models, for people with hearing disabilities.